When in doubt, decompose problems

An algorithm to solve hard problems

One of the most helpful mental tricks I've picked up in tackling product challenges is to recursively decompose problems.

The strategy is pretty intuitive. Keep breaking a problem down until you can't break it down any further. Only once you've broken problems down to their smallest parts do you brainstorm and test solutions.

It goes something like this...

    function decompose(problem) {
      const subproblems = problem.subproblems();

      // Can you break the problem down?
      if (!subproblems || subproblems.length === 0) {
        return brainstormSolutions(problem); // no: brainstorm solutions
      }

      return subproblems.flatMap(decompose); // if so, decompose each one again!
    }

By being disciplined about following this algorithm, I believe everyone can improve the rate at which they solve problems, the quality of their solutions, and the level of ambiguity they can handle. Let's explore it a little more and see it in action.

The Problem with Most Problems

The problem with most problems is that they are presented at a level that's unsolvable. It is through decomposing a problem into smaller units that it can be understood and ultimately solved.

Why isn't our business growing faster? Why are our customers churning? Why am I not finding good candidates to hire? It's easy to fall into the trap of guess-and-check, to jump to solutions that might solve the problem. But by systematically breaking down problems into their atomic parts, you're more likely to find the non-obvious solutions.

When faced with a problem with a ton of ambiguity, unpack the problem into a series of smaller sub-problems. The first step: decompose!

"Our business is not growing fast enough because (a) we are not adding customers fast enough, (b) our churn rate increased last quarter, and (c) we're buried in support tickets."

That's where many people, myself included, fall into the Idea Trap. The mind naturally starts to brainstorm ways to solve these sub-problems.

We can host a webinar! We can add a self-service option! We can make our docs clearer!

Most people will have reasonable ideas about how to solve each sub-problem. And they will probably be right, some of the time. But until you have the map of all the nubby sub-problems, it's still unclear what needs to be solved.

But what if we decompose these sub-problems again?

Well, it turns out we are not adding customers fast enough because we're not reaching out to our leads fast enough. And customers are churning at higher rates in Europe because of a new regulation. And our support load is largely driven by non-paying customers who use Advanced Feature #7.

Can we break these problems down any further? If the answer is yes, and that will lead to better insight about the problem itself, then do it. If not, we've achieved problematomicity. We've reached the end of the line: the problems cannot be broken down further. They are indivisible.

At this point, we can begin to brainstorm solutions for the atoms that comprise the larger problem.
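To make the loop concrete, here is a minimal JavaScript sketch that encodes the growth example above as a tree and collects its leaves. The object shape (`name`, `children`) and the `atoms` helper are assumptions made for illustration, and the tree contains only the sub-problems named in this post.

```javascript
// Hypothetical shape: each problem has a name and a list of child problems.
const growth = {
  name: "Business is not growing fast enough",
  children: [
    {
      name: "Not adding customers fast enough",
      children: [{ name: "Not reaching out to leads fast enough", children: [] }],
    },
    {
      name: "Churn rate increased last quarter",
      children: [{ name: "New regulation is driving churn in Europe", children: [] }],
    },
    {
      name: "Buried in support tickets",
      children: [
        { name: "Non-paying customers using Advanced Feature #7", children: [] },
      ],
    },
  ],
};

// Walk the tree and return the atomic problems (leaves), each with the path
// of parent problems that led to it.
function atoms(problem, path = []) {
  const here = [...path, problem.name];
  if (problem.children.length === 0) {
    return [{ atom: problem.name, path: here }];
  }
  return problem.children.flatMap((child) => atoms(child, here));
}

// Print each atom with how many levels of decomposition it took to reach it.
for (const { atom, path } of atoms(growth)) {
  console.log(`${atom} (depth ${path.length - 1})`);
}
```

Keeping the path alongside each atom is a design choice: when you brainstorm solutions for a leaf, you can still see which top-level problem it is supposed to move.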

Example: OPower

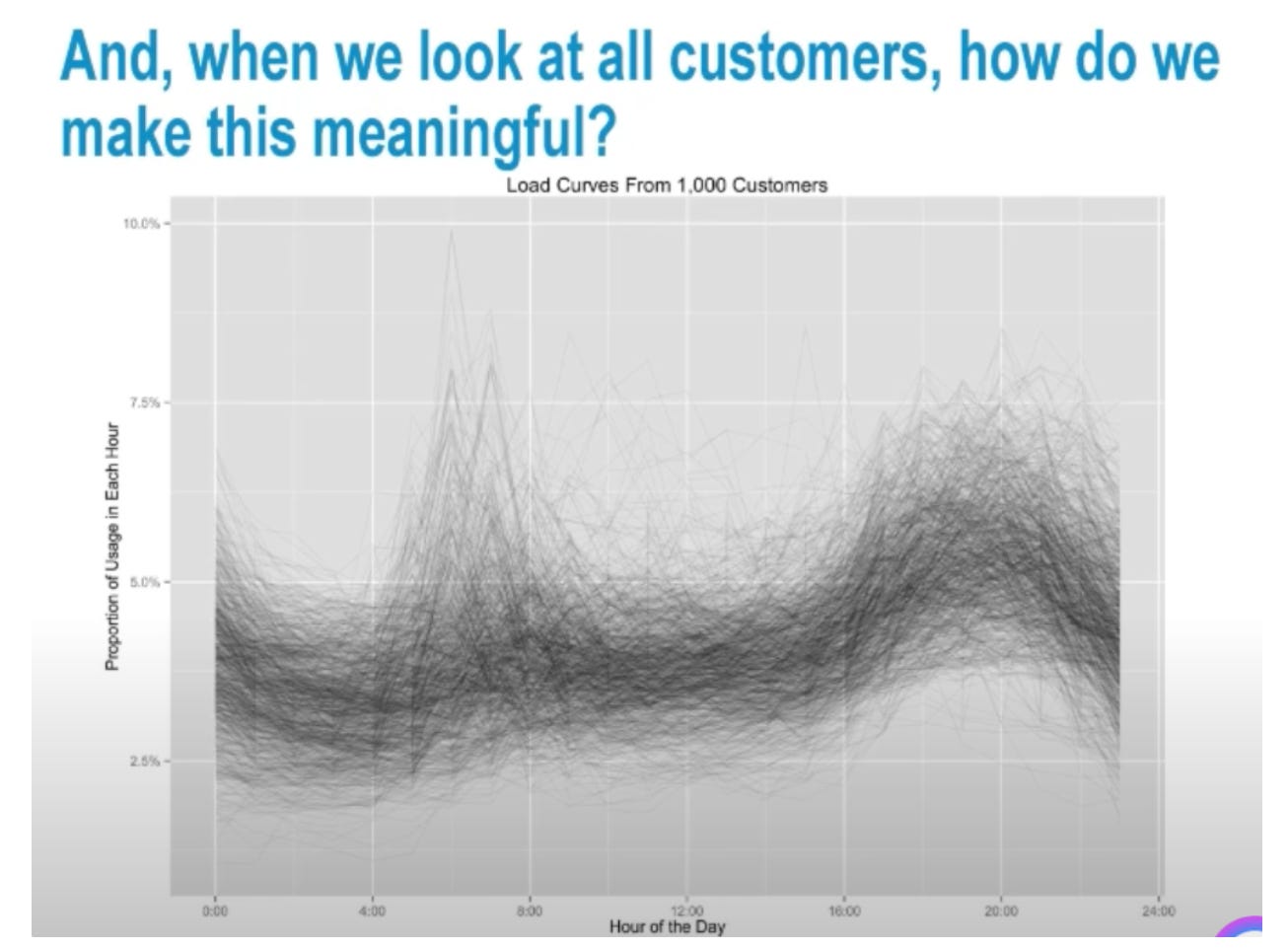

An example of this type of recursive problem solving I saw recently is this 2016 talk by the OPower data science team. I recommend a full watch, but the "problem" they set out to solve was this: how do you get people to consume less energy at home, to help reduce climate emissions?

Well, to answer that question, they start by understanding how people consume energy. They go out and get the data for the energy consumption patterns of households in an area. You can see this chart below. They refer to this as the "hairball" chart, because it's so overloaded that it's hard to discern what's really going on.

I find this to be such a great visual representation of an ambiguous problem:

A spike means the household is using lots of power during that period of the day. You can see how power usage falls off at the end of the day, when people go to bed.

But the team at OPower decomposes this problem! By using a clustering algorithm, they find a few common usage patterns in the data:

They decompose the "hairball" into 5 archetypes. With these archetypes in hand (such as the Night Owls, who use energy at night, and the Twin Peaks, who consume before leaving for work or school and again when they return), they decompose again!

They figure out what drives usage for each of the household archetypes. For example, the Twin Peaks archetype uses energy in the morning and at night. This typically aligned with working families getting the kids ready for school and making dinner at home after work. Households in an archetype like the Steady Eddies, by contrast, were more likely retired or stay-at-home, using energy evenly throughout the day.
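As a rough illustration of how clustering can pull a hairball apart, here is a small k-means sketch in JavaScript. Everything in it is an assumption for illustration: this post doesn't say which clustering algorithm or data the team used, the six "households" are made-up toy curves with four readings per day, the example finds three groups rather than five, and `kMeans` is a hand-rolled helper rather than a library call.

```javascript
// Toy data (invented for illustration): share of daily energy used in
// [morning, midday, evening, night] for six hypothetical households.
const households = [
  [0.40, 0.10, 0.40, 0.10], // peaks before and after work
  [0.38, 0.12, 0.42, 0.08],
  [0.25, 0.25, 0.25, 0.25], // flat all day
  [0.24, 0.26, 0.26, 0.24],
  [0.10, 0.10, 0.30, 0.50], // heavy at night
  [0.08, 0.12, 0.28, 0.52],
];

// Squared Euclidean distance between two load curves.
const dist = (a, b) => a.reduce((sum, x, i) => sum + (x - b[i]) ** 2, 0);

// Index of the centroid closest to a curve.
const nearest = (curve, centroids) =>
  centroids.reduce(
    (best, c, i) => (dist(curve, c) < dist(curve, centroids[best]) ? i : best),
    0
  );

// Plain k-means: assign each curve to its nearest centroid, move each
// centroid to the mean of its members, repeat until assignments stop changing.
function kMeans(curves, initialCentroids, maxIterations = 50) {
  let centroids = initialCentroids.map((c) => [...c]);
  let labels = [];
  for (let iter = 0; iter < maxIterations; iter++) {
    const next = curves.map((curve) => nearest(curve, centroids));
    if (next.every((label, i) => label === labels[i])) break;
    labels = next;
    centroids = centroids.map((old, k) => {
      const members = curves.filter((_, i) => labels[i] === k);
      if (members.length === 0) return old; // keep an empty cluster's centroid
      return old.map((_, d) => members.reduce((s, m) => s + m[d], 0) / members.length);
    });
  }
  return { labels, centroids };
}

// Seed with one curve from each visible group so the run is deterministic.
const { labels } = kMeans(households, [households[0], households[2], households[4]]);
console.log(labels); // → [0, 0, 1, 1, 2, 2]: three groups of two
```

The cluster labels are only the first cut. Naming the groups and asking what drives each one is the next round of decomposition, and that part is human work.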

By unpacking the hairball chart into a series of sub-problems, OPower was able to solve their top-level problem by delivering targeted solutions that aligned with the sub-problems they came across in their research.

Common failure modes

So why do people exit the loop early and settle for solutions before understanding the full breadth of the problem? Here are the common failure modes I've experienced:

Idea Trap: you get impatient and jump to solutions too early. This can be solved by being disciplined about following the algorithm, and getting others' opinions on whether you've reached the deepest depths of the problem space.

Data isn't readily available: breaking down problems often requires exploring data. Data can be unavailable, low-quality, untrusted, or hard to collect. This can add an additional step to the recursive loop. In the Opower example, the first step was actually collecting the meter data to understand how people use electricity throughout the day.

Lacking context: decomposing problems in meaningful ways often requires high context. Do you break a problem down by geo or by industry vertical? Should you focus on supply-side or demand-side? Context drives good hypotheses that accelerate problem decomposition. Lack of context means you have to explore more lower-probability hypotheses.
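One cheap way to compare candidate cuts when context is thin is to group the same records by each dimension and see which grouping separates the metric most. The JavaScript sketch below does that for churn. The records, the field names (`geo`, `vertical`, `churned`), and the "gap between highest and lowest group" heuristic are all assumptions made up for illustration, not data or a method from this post.

```javascript
// Invented customer records for illustration only.
const customers = [
  { geo: "Europe", vertical: "Retail", churned: true },
  { geo: "Europe", vertical: "Finance", churned: true },
  { geo: "Europe", vertical: "Retail", churned: true },
  { geo: "Europe", vertical: "Finance", churned: false },
  { geo: "Americas", vertical: "Retail", churned: false },
  { geo: "Americas", vertical: "Finance", churned: true },
  { geo: "Americas", vertical: "Retail", churned: false },
  { geo: "Americas", vertical: "Finance", churned: false },
];

// Churn rate per value of one dimension, e.g. { Europe: 0.75, Americas: 0.25 }.
function churnBy(records, dimension) {
  const groups = {};
  for (const record of records) {
    const key = record[dimension];
    if (!groups[key]) groups[key] = { total: 0, churned: 0 };
    groups[key].total += 1;
    if (record.churned) groups[key].churned += 1;
  }
  return Object.fromEntries(
    Object.entries(groups).map(([key, g]) => [key, g.churned / g.total])
  );
}

// Gap between the highest and lowest group rate: a crude signal of how much
// a cut explains. A larger gap suggests a more promising way to decompose.
function spread(rates) {
  const values = Object.values(rates);
  return Math.max(...values) - Math.min(...values);
}

for (const dimension of ["geo", "vertical"]) {
  const rates = churnBy(customers, dimension);
  console.log(dimension, rates, "spread:", spread(rates));
}
```

In this toy data the geo cut separates churn (0.75 versus 0.25) while the vertical cut does not (0.5 versus 0.5), so geo would be the more promising branch to decompose next. Real data is rarely this clean, and a large gap on a tiny group can easily be noise.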